New Poll Shows That People Don’t Trust AI-Generated Health Information

Updated on May 31, 2025

Artificial intelligence (AI) is already helping to detect breast cancer earlier, and may soon help doctors to better predict a person’s breast cancer risk. But when it comes to AI-generated health information, studies have found that inaccuracies are common — something that Americans have picked up on, according to a poll from KFF.

To find out more about how U.S. adults use AI to answer health questions and whether they trust those answers, researchers with the nonprofit health policy organization surveyed 2,428 Americans, in English and Spanish, last June. Most people surveyed said they've used or interacted with AI at least once before. About one-third of all the adults surveyed (age 18+) and nearly half of younger adults (ages 18–29) say they use or interact with AI several times a week.

People aren’t sure if they can separate fact from fiction

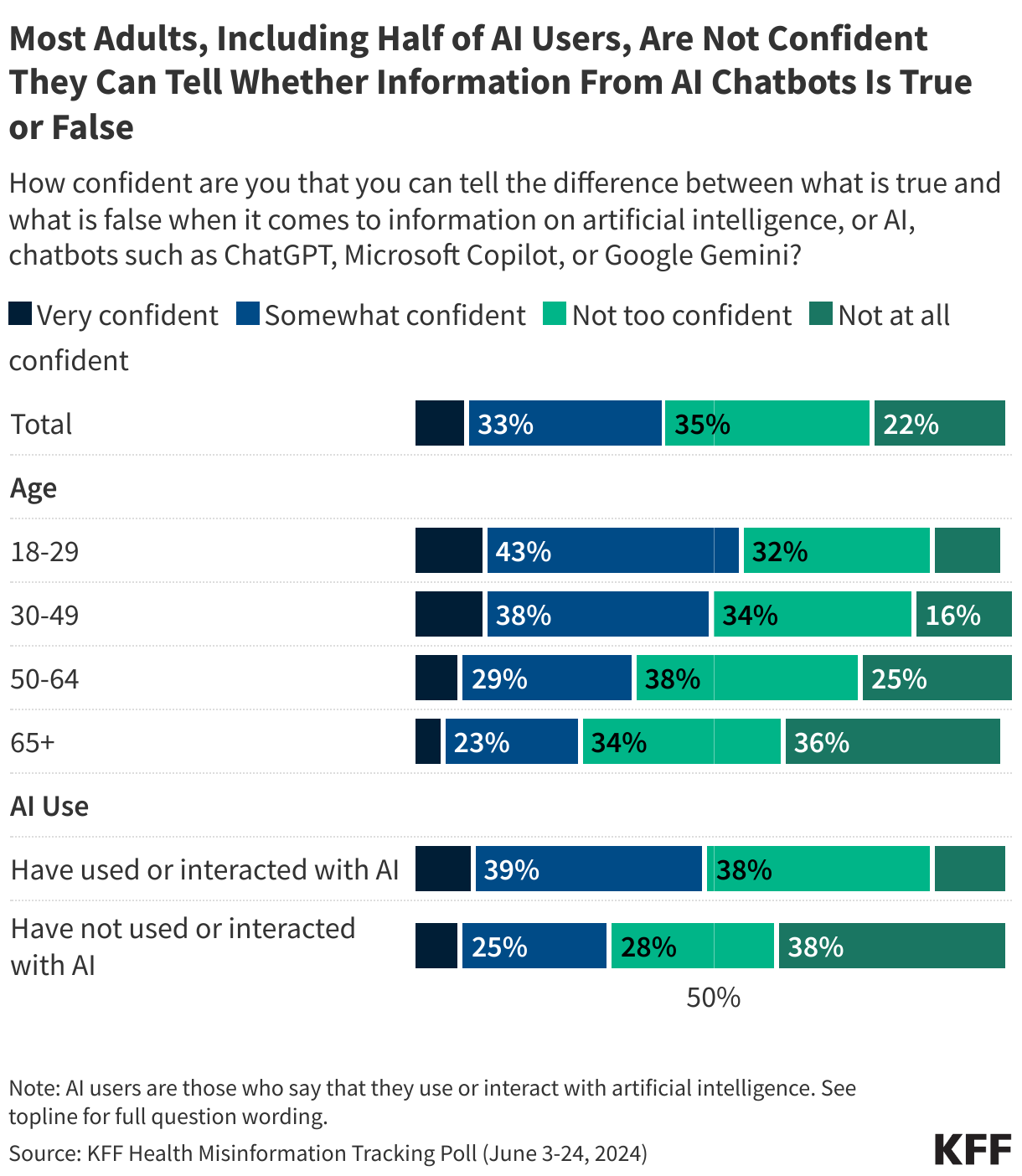

Despite AI use, most people say they're not sure they can tell the difference between what's true and what's false when it comes to answers provided by AI chatbots.

Only 9% say they feel very confident and 33% say they feel somewhat confident that they can tell the difference between true and false answers from AI. Together, that is fewer than half of respondents. That uncertainty can make AI misinformation harder to combat.

Distrust in Dr. Chatbot

One in six people surveyed said they use AI chatbots at least once a month to answer health questions and seek medical advice. The share was higher among adults under age 30: one in four asked AI chatbots to answer health questions at least once a month. But research shows that AI tools are regularly wrong when diagnosing medical conditions and can’t produce relevant sources to back up their answers.

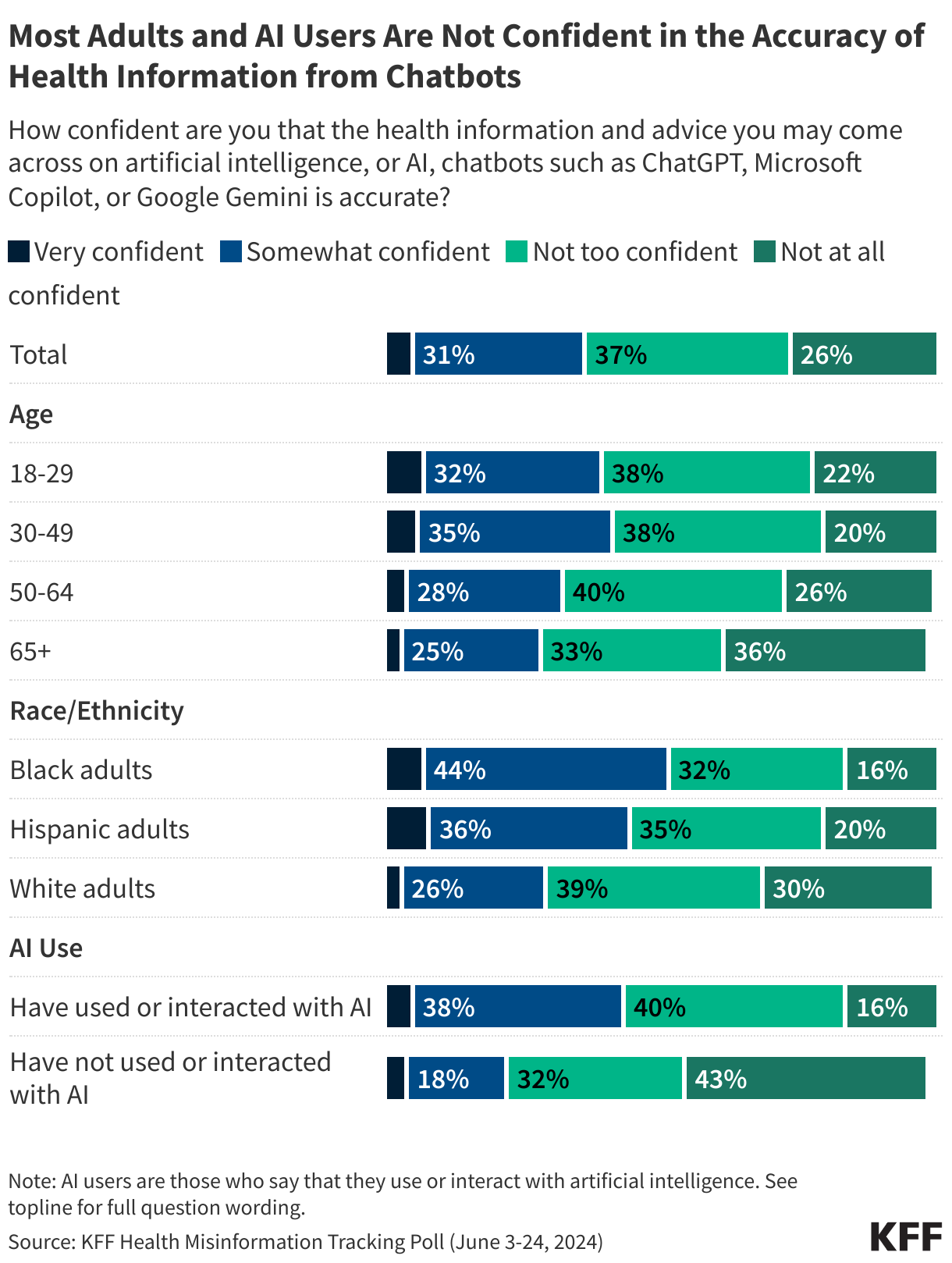

The public isn’t convinced the health information from AI chatbots is correct, the poll found. Only one-third of adults are confident in the accuracy of AI health information and advice. This is generally true across age and racial and ethnic groups.

Are AI answers helping or hurting?

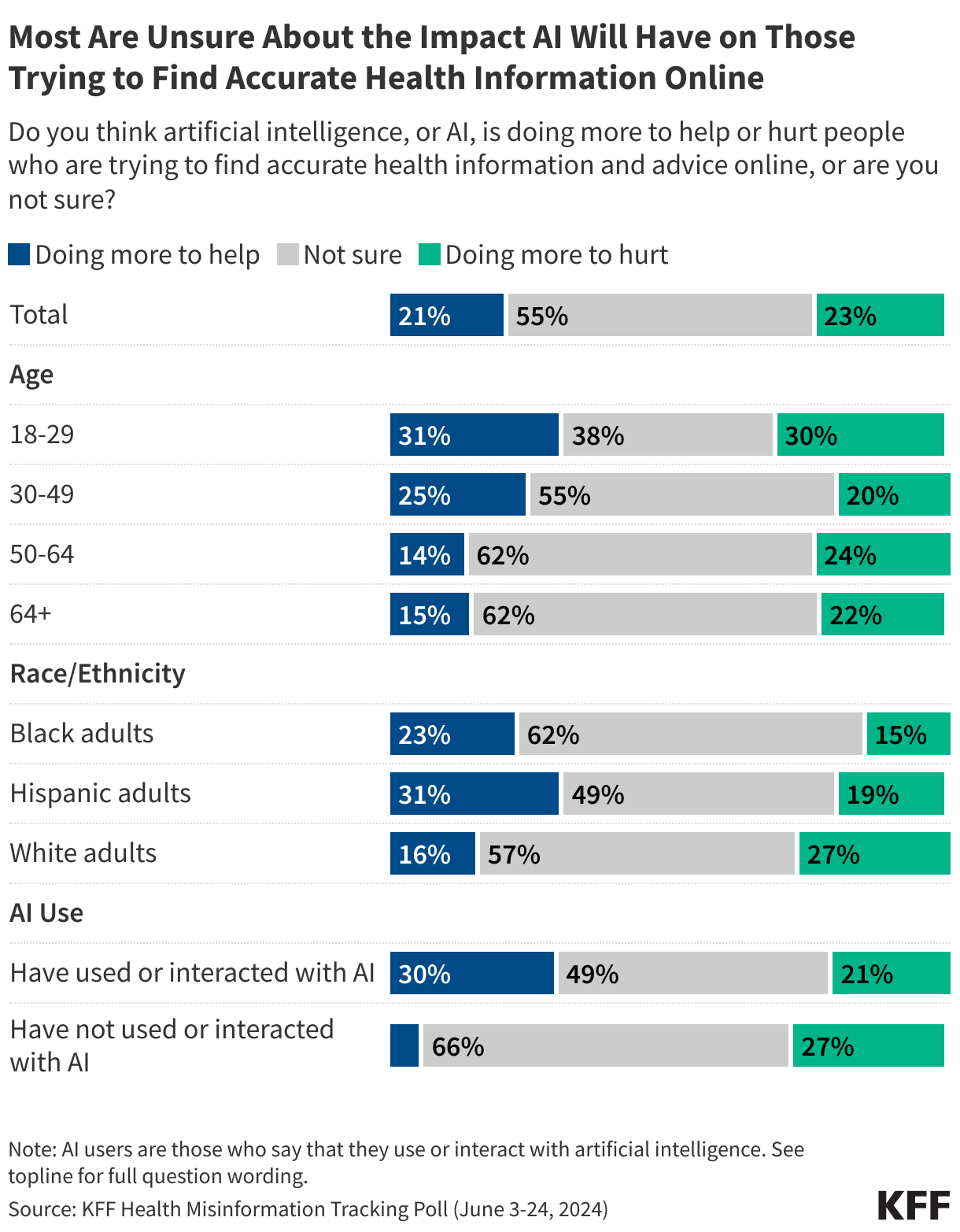

We’re in the early stages of widespread use of AI technology by the general public, and experts still don’t know all of the benefits and risks of AI use, especially when people turn to it for health information.

Neither do most people: More than half of respondents (55%) are unsure if AI chatbots will help or hurt people looking for accurate health information and advice. Even those who use the technology are unsure what the impact will be: 21% say the chatbots are doing more to hurt efforts to find accurate medical information and 49% are unsure.

What this means for you

It’s important to exercise caution when using AI chatbots to search for medical advice and be aware of the inaccuracies these tools can spread. Your medical team of trained professionals is your best source of information relating to your cancer diagnosis or treatment.